Opportunities abound for sharing data “for good”: to turbocharge clinical trials, inform medical research, anticipate and better manage epidemics, and help individuals pursue personal health goals by benchmarking themselves against peers.

At the same time, third-party data brokers and marketing interests, with which consumers have no direct relationship and of which they have no knowledge, are scraping together bits of personal information from internet clouds, social networks, and retail transactions, and profiting from it. That value does not accrue to the very individuals whose data are being sold.

Here’s Looking At You: How Personal Health Information Gets Tracked And Used, published by California HealthCare Foundation on July 15, 2014, is my take on the emerging world of “small data” (consumer-generated data), Big Data, the great opportunities for data analytics in public and personal health, and the growing use of people’s “dark data.” The privacy issues around data flowing out of credit card swipes, social network check-ins, digital health tracking apps, and smartphone GPS geolocation functions are especially thorny for health, where HIPAA protections don’t extend.

The infographic illustrates scenarios of two consumers’ self-generated data that’s “out there.” Kate is an engaged patient managing her chronic disease by participating in a clinical trial and feeding her data into a patient portal; using a mood tracking app and checking in with peers on a health social network; refilling prescriptions and earning loyalty points at her favorite retail pharmacy; and using a credit card on Amazon to buy books about her condition. A third-party data analysis company aggregates these data into a profile of Kate that is available to large employers, for a price. This could lead to employment discrimination against Kate, who is looking for a new job and is unaware the profile exists.

In the second persona, Jermaine is a healthy nonsmoker who has a blood sample sent to a lab that screens for smoking so that he can participate in his employer’s wellness program and receive a discount on his health insurance premium. The wellness program compels Jermaine to sign an agreement that allows his lab data to be shared with a third party. He manages high cholesterol by adhering to a medication regimen of prescribed statins, and he chats on Facebook with a group of guys who talk about basketball and health. A third-party data analytics firm mashes up his data and places him in the market segment “Ethnic Second City Struggler.” Jermaine is unaware that the bank to which he’s applied for a home mortgage has access to this market research.

These two stories are real and documented.

Deven McGraw, a privacy law expert at Manatt, Phelps & Phillips, warns in the report: “Digital dust can have health implications even if the ‘dust’ is devoid of actual health information. FICO and other ‘scores’ could have significant implications for consumers, arguably as significant as a score generated using health data.”

Examples of such non-health data could be multiple late-night Foursquare check-ins at a bar, combined with retail receipts from fast-food restaurants and line items for over-the-counter sleeping pills and cans of Red Bull.

When it comes to consumer-generated health data, there are also privacy risks in where one’s information might eventually flow. The Privacy Rights Clearinghouse examined 43 popular mobile health and fitness applications between March and June 2013, roughly half on Apple iOS and half on Google Android. Researchers found that 39% of free apps and 30% of paid apps sent data to a party not disclosed by the developer, either in the app or in any privacy policy.

HIPAA doesn’t apply to most of these data flows because they originate outside of HIPAA-covered entities. Beyond closing the holes in privacy law for the Big Data/small data era, there are ways to mitigate people’s risks, beginning with developers and third-party marketing firms making their privacy policies more accessible, simple, and transparent. Consumers need more information on the basics of how each of us leaves digital footprints in daily life. There is indeed value in sharing personal data, and organizations that can help people benefit from analytics on their personal data, in sickness and in health alike, should clearly explain the benefits of sharing data for good and the value that can accrue to consumers in return. There are also emerging technologies, such as personal health data lockers and personal data clouds, that could enable people to determine with whom and how they share their data.

On the upside, small and Big Data can and will drive public and personal health. Paul Wicks of PatientsLikeMe and Kipp Bradford, a biomedical engineer who is part of the “Maker Health” movement, are bullish on data analytics’ role in supporting a continuously learning health system on a personal level. It would be enabled by sensors, algorithms, and scientists working through a “command center” that monitors people and alerts us (and our caregivers, as required) in real time to tweak behaviors: taking a medication, eating a particular food, taking a walk, or checking in with our doctors ASAP.

Think of this as applying the N of the many to the N of 1. As Bradford put it, “when you do a clinical trial with 1,000 people, you’re taking 1,000 people’s physiologic measurements and generalizing that across a billion people. But if you can have a trial with 7 billion people, then you can understand the nuances of the effects on one person.”

David Goldsmith of Dossia called this “population health made personal.”

Health Populi’s Hot Points: Big Data creates Big Opportunities along with Big Challenges. Consumers could get much smarter (quickly!) by engaging more mindfully on social networks, using cash for purchases they might not want a third party to know about, and consciously weighing rewards, benefits, and risks when sharing personal data.

Technology developers and organizations using Big Data analytics could communicate consumer-friendly privacy policies more transparently, integrate security technologies that are available in the market today, and respect consumers’ data contributions, which are the lifeblood that must flow through algorithms for them to be useful.

Finally, policymakers need to recognize, and act on, the fact that HIPAA isn’t relevant to this world, which is moving ahead much more quickly than regulatory bodies have.

Mikki Nasch of The Activity Exchange, who was involved in the formative development of the FICO Score, advises: “Being conscious about how you are generating data about yourself will quantify into behavioral attributes. This will be your good driving record in the future.”

My goal in writing this paper is to inspire all stakeholders in Big Data and health to be good drivers in the future.

I'm in amazing company here with other #digitalhealth innovators, thinkers and doers. Thank you to Cristian Cortez Fernandez and Zallud for this recognition; I'm grateful.

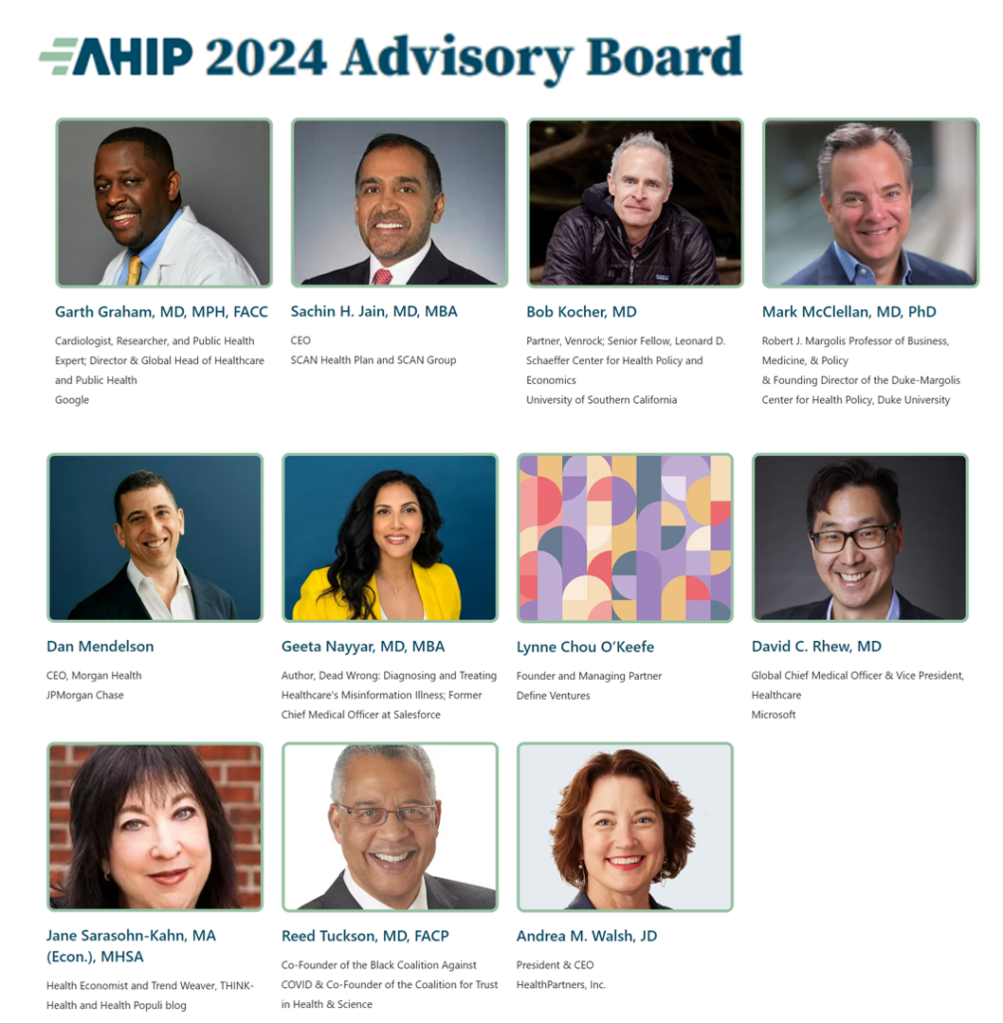


Jane was named as a member of the AHIP 2024 Advisory Board, joining some valued colleagues to prepare for the challenges and opportunities facing health plans, systems, and other industry stakeholders.

Join Jane at AHIP's annual meeting in Las Vegas: I'll be speaking, moderating a panel, and providing thought leadership on health consumers and bolstering equity, empowerment, and self-care.