There’s a potentially large obstacle that could keep artificial intelligence (AI) from delivering the full benefits that today’s go-go, bullish forecasts promise for healthcare: a decline in consumers’ willingness to share their personal data.

Along with the overall erosion of people’s trust in government and other institutions come this week’s revelations about Facebook, Cambridge Analytica, and the so-called Deep State.

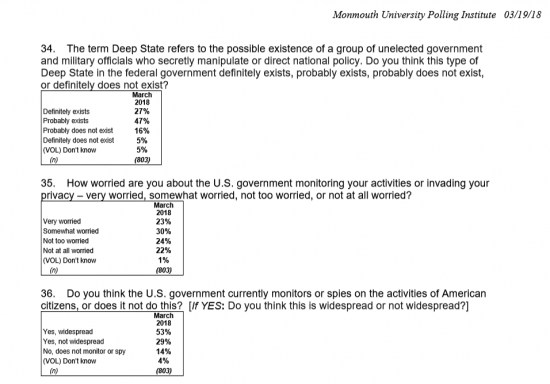

Three-fourths of Americans believe that some type of “Deep State” exists in the federal government, according to a new poll from Monmouth University published yesterday. I clipped the responses to three of the survey’s most relevant questions here. Not only do 74% of U.S. health citizens think a Deep State exists, but more than half of people worry that the U.S. government is monitoring them or invading their privacy.

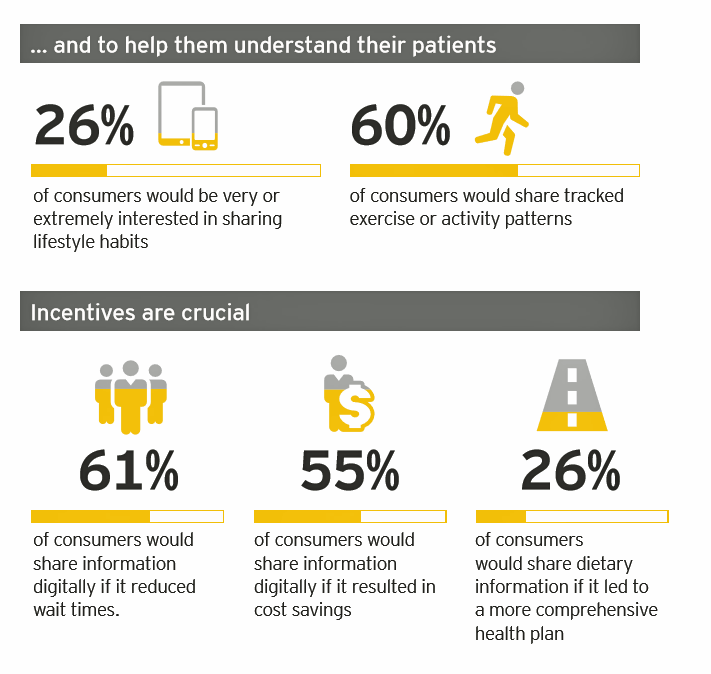

Add to this a new report from EY on health consumers, which notes that while most people would be glad to use some form of technology to interact with their clinicians, only 26% would be interested in sharing their lifestyle data. That is, unless consumers received incentives: in exchange for disclosing lifestyle data, 61% would seek reduced wait times, 55% lower health care costs, and 26% a more comprehensive health plan.

My third data point comes from a conversation I observed at HIMSS among Dr. Kyu Rhee, Chief Medical Officer of IBM Watson Health, Dr. Cris Ross, Mayo Clinic CIO, and Dr. James Madara, President of the American Medical Association. Jonah Comstock covered this meet-up here, and I draw on his quotes from the conversation.

“Fundamentally, health and healthcare are about trust,” Dr. Rhee said. “Data is such a natural resource and sharing data across multiple stakeholders requires trust…from the patient…from the provider and the payers, and all these different stakeholders that need to connect this data together to bring these insights out.”

Those insights come from machine learning and AI, which need data to generate the insights that help realize the promise of truly, deeply personalized medicine.

Without a robust data mine that goes beyond healthcare claims and the medical system and reaches into people’s everyday lives (from the kitchen to the bathroom, the bedroom to the bar), the algorithms underpinning AI in healthcare won’t be informed by our 360-degree lives.

But trust comes before sharing, and Americans’ trust in institutions is eroding even more quickly in 2018. I noted eroding trust in healthcare earlier this year in the 2018 Edelman Trust Barometer.

Health Populi’s Hot Points: This week’s revelations about Facebook, Cambridge Analytica, and the U.S. political system will contribute to Americans’ continued cynicism and concern for privacy. People, especially when wearing the hat of a patient, need to feel that their personal data are held by trusted stewards. Doctors have long played that singular role of trusted data holder in the patient relationship.

In the era of big data and machine learning in health care, “It is no surprise that medicine is awash with claims of revolution from the application of machine learning to big healthcare data,” Andrew Beam and Isaac Kohane asserted in a spot-on essay in JAMA last week.

“Deep learning algorithms require enormous amounts of data to capture the full complexity, variety, and nuance inherent to real-world images,” they recognized. But these systems aren’t magic devices that can “spin data into gold,” they wrote. They were addressing the fairness of algorithms, raising the clichéd but still-true notion of “garbage in, garbage out.”

That “garbage” morphs into pearls when patients share lifestyle and very personal data. But without trust, that sharing chills and erodes, and with it goes the Holy Grail that techno-optimists expect AI to bring to healthcare.

Thank you FeedSpot for